History of Artificial Intelligence: From Its Invention to Modern Development

The history of artificial intelligence (AI) is a fascinating journey that shows how far technology has evolved in just a few decades. The idea of AI took shape in the 1950s, when pioneers such as Alan Turing and John McCarthy imagined machines that could think like humans. Turing's 1950 paper "Computing Machinery and Intelligence" asked whether machines can think, and McCarthy coined the term "artificial intelligence" for the 1956 Dartmouth workshop. At that time, research was mostly theoretical, but it laid the foundation for what was to come.

During the 1960s and 1970s, AI gained momentum as researchers developed early programs capable of solving logical problems and playing simple games. However, limited computing power slowed progress, leading to what became known as the first “AI winter,” a period of reduced funding and optimism.

In the 1980s, expert systems emerged, bringing AI back into the spotlight. Industries began using these systems for decision-making in medicine and business. However, the hype eventually faded again due to high costs and technical limitations.

The 1990s and 2000s marked a turning point. With more powerful computers and the rise of the internet, AI research accelerated. As a result, machine learning and neural networks became more practical. The same growth in computing power enabled famous achievements, such as IBM’s Deep Blue defeating world chess champion Garry Kasparov in 1997, a victory that relied mainly on fast search and specialized hardware rather than on learning.

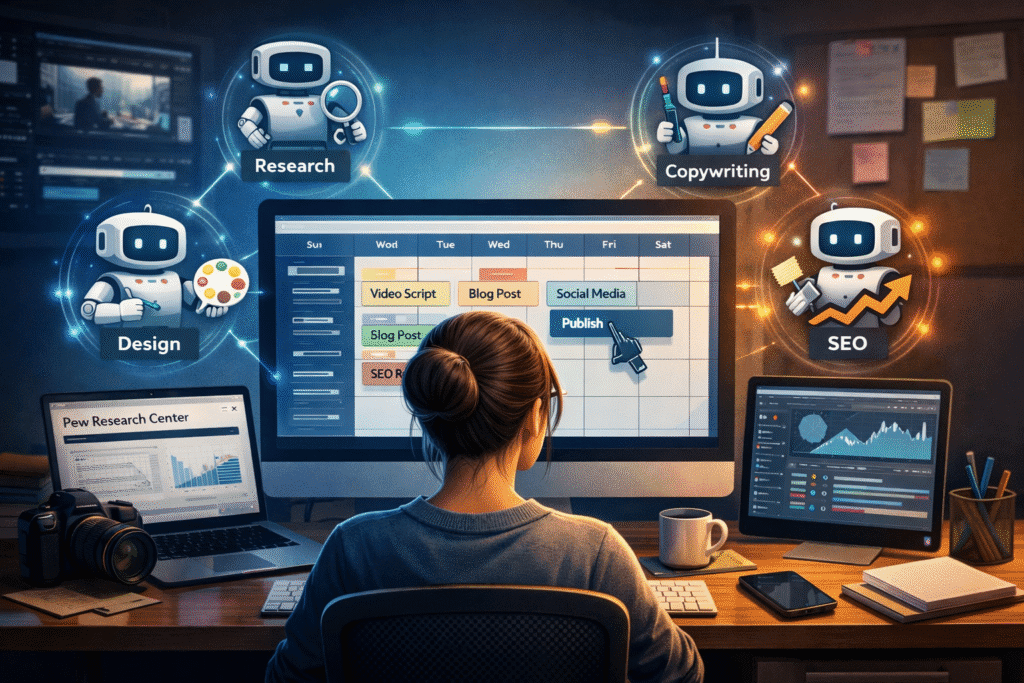

In the last decade, AI has grown faster than ever. Thanks to big data, cloud computing, and advances in algorithms, technologies such as natural language processing, computer vision, and generative AI have become part of everyday life. Today, AI supports bloggers, creators, and small businesses by automating tasks, improving productivity, and opening new opportunities.

In conclusion, the history of artificial intelligence shows that innovation often follows cycles of success and setbacks. Today, AI is no longer just a concept; it is a practical tool shaping industries worldwide.
